Twitter is perfect as a megaphone for the far right: its trending topics are easy to game, journalists spend way too much time on the site, and—if you’re lucky—the President of the United States might retweet you.

QAnon, the continuously evolving pro-Trump conspiracy theory, is good at Twitter in the same way as other successful internet-native ideologies: it uses the platform to manipulate information, attention, and distribution all at the same time. On Tuesday, Twitter took steps aimed at limiting how successful QAnon can be there, including taking down about 7,000 accounts that promote the conspiracy, designating QAnon as “coordinated harmful activity,” and preventing related terms from showing up in trending and search results.

“We will permanently suspend accounts Tweeting about these topics that we know are engaged in violations of our multi-account policy, coordinating abuse around individual victims, or are attempting to evade a previous suspension,” Twitter announced. The company added that it had seen an increase in those activities in recent weeks.

The New York Times reported that Facebook was planning to “take similar steps to limit the reach of QAnon content on its platform” next month, citing two employees of the company who spoke anonymously. On Friday, TikTok blocked several hashtags related to QAnon from search results.

This most recent push to limit QAnon’s reach follows two high-profile campaigns driven by QAnon. First, American model and celebrity Chrissy Teigen, who has more than 13 million followers on Twitter, was the target of an intense harassment campaign. Then, more recently, QAnon accounts were instrumental in spreading a bogus human trafficking conspiracy theory about the furniture marketplace Wayfair. The claims spread from Twitter’s trending bar to Instagram and TikTok accounts, which promoted the conspiracy theory to their followers.

“That activity has raised the profile of the very long-standing problem of coordinated brigading. That kind of mass harassment has a significant impact on people’s lives,” said Renee DiResta, research manager at the Stanford Internet Observatory and an expert in online disinformation.

But Twitter proficiency is only one small part of why QAnon wields influence, and just one example of how platforms amplify fringe beliefs and harmful activity. To actually stop QAnon, experts say, would take a lot more work and coordination. That is, if it’s even possible.

An omniconspiracy

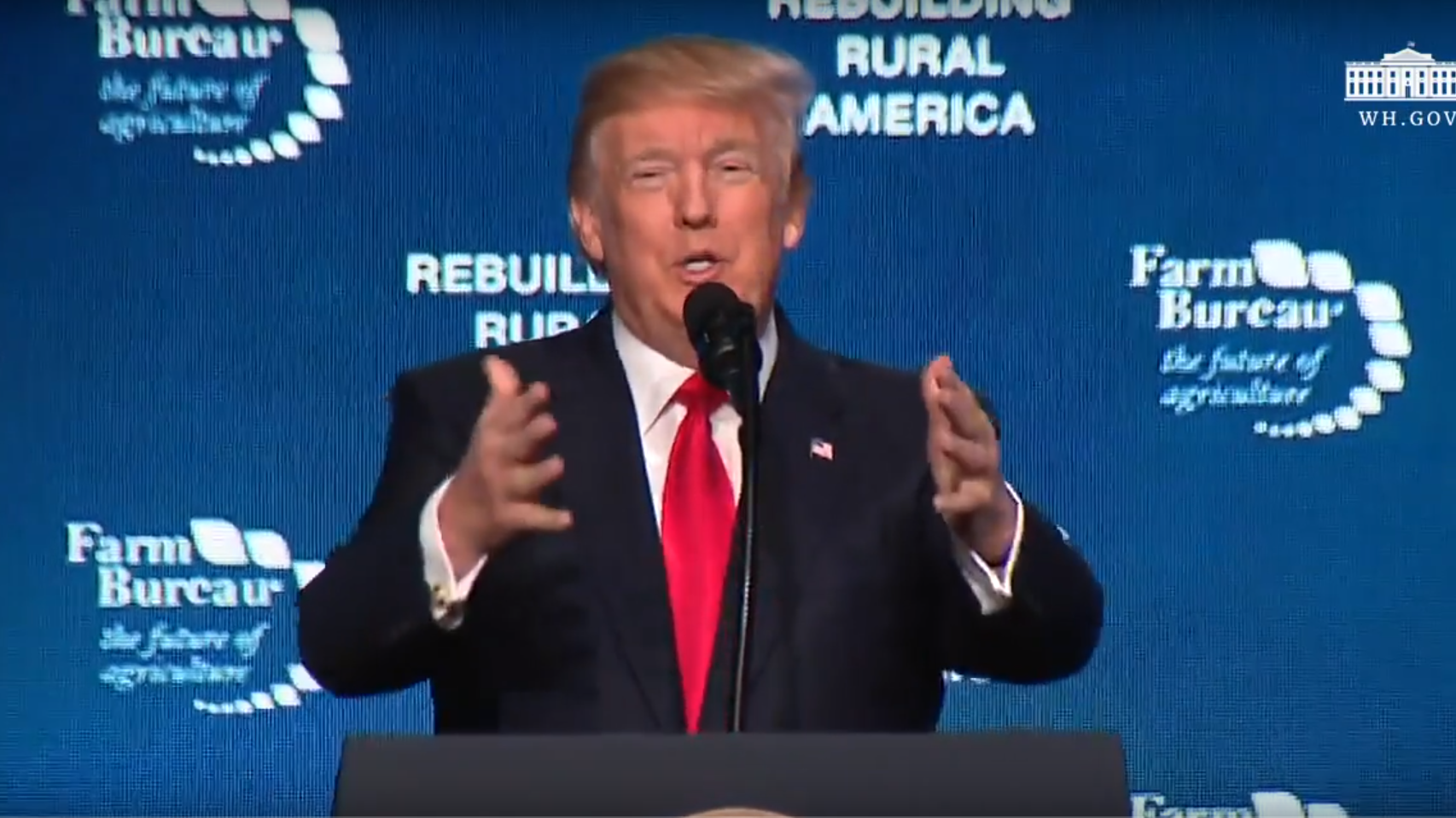

QAnon was born in late 2017 after a quip President Donald Trump made in a press conference about a “calm before the storm” spawned a series of mysterious posts on 4chan attributed to “Q,” predicting the coming arrest of Hillary Clinton. Although that didn’t happen, “Q” continued to post, claiming to know all about a secret plan led by Trump to arrest his enemies.

“QAnon has its origin in a multiplatform conversation that started off on social media, in a pseudonymous environment, where there’s no consequence for speech,” says Brian Friedberg, a senior researcher at the Harvard Shorenstein Center’s Technology and Social Change Project. The posts have moved from one site to another following bans, and now appear on a messageboard called 8kun.

The posts have attracted followers who spend their time interpreting these messages, drawing conclusions, and leading campaigns to make the messages more visible. Some QAnon adherents have led coordinated harassment campaigns against journalists, rival online communities, celebrities, and liberal politicians. Others have shown up at Trump rallies wearing “Q” themed merchandise. The president has retweeted Q or conspiracy theory-related Twitter accounts dozens of times, although it’s an open question how aware he is of what Q is, beyond a movement that supports his presidency on the internet. And there have been multiple incidents of real-life violence linked to QAnon supporters.

The traditional understanding of QAnon was that its ideas are spread by a relatively small number of adherents who are extremely good at manipulating social media for maximum visibility. But the pandemic made that more complicated, as QAnon began merging more profoundly with health misinformation spaces, and rapidly growing its presence on Facebook.

At this point, QAnon has become an omniconspiracy theory, says DiResta—it’s no longer just about some message board posts, but instead a broad movement promoting many different, linked ideas. Researchers know that belief in one conspiracy theory can lead to acceptance of others, and powerful social media recommendation algorithms have essentially turbocharged that process. For instance, DiResta says, research has shown that members of anti-vaccine Facebook groups were seeing recommendations for groups that promoted the Pizzagate conspiracy theory back in 2016.

“The recommendation algorithm appears to have recognized a correlation between users who shared a conviction that the government was concealing a secret truth. The specifics of the secret truth varied,” she says.

Researchers have known for years that different platforms play different roles in coordinated campaigns. People will coordinate in a chat app, message board, or private Facebook group, target their messages (including harassment and abuse) on Twitter, and host videos about the entire thing on YouTube.

In this information ecosystem, Twitter functions more like a marketing campaign for QAnon, where content is created to be seen and interacted with by outsiders, while Facebook is a powerhouse for coordination, especially in closed groups.

Reddit used to be a mainstream hub of QAnon activity, until the site started clamping down on it in 2018 for inciting violence and repeated violations of its terms of service. But instead of losing power, QAnon simply shifted to other mainstream social media platforms where it was less likely to be banned.

This all means that when a platform acts on its own to block or reduce the impact of QAnon, it only attacks one part of the problem.

Friedberg said that, to him, it feels as if social media platforms were “waiting for an act of mass violence in order to coordinate” a more aggressive deplatforming effort. But the potential harm of QAnon is already obvious if you stop viewing it as a pro-Trump curiosity and instead see it for what it is: “a distribution mechanism for disinformation of every variety,” Friedberg said, one that adherents are willing to openly promote and identify with, no matter the consequences.

“People can be deprogrammed”

Steven Hassan, a mental health counselor and an expert on cults who escaped from Sun Myung Moon’s Unification Church, whose members are known as the “Moonies,” says that discussing groups like QAnon as solely a misinformation or algorithmic problem is not enough.

“I look at QAnon as a cult,” Hassan says. “When you get recruited into a mind control cult, and get indoctrinated into a new belief system…a lot of it is motivated by fear.”

“People can be deprogrammed from this,” Hassan says. “But the people who are going to be most successful doing this are family members and friends.” People who are already close to a QAnon supporter could be trained to have “multiple interactions over time” with them, to pull them out.

If platforms wanted to seriously address ideologies like QAnon, they’d do much more than they are, he says.

First, Facebook would have to educate users not just on how to spot misinformation, but also on how to understand when they are being manipulated by coordinated campaigns. Coordinated pushes on social media have been a major factor in QAnon’s growing reach on mainstream platforms over the past several months, as the Guardian recently documented. The group has explicitly embraced “information warfare” as a tactic for gaining influence. In May, Facebook removed a small collection of QAnon-affiliated accounts for inauthentic behavior.

And second, Hassan recommends that platforms stop people from descending into algorithmic or recommendation tunnels related to QAnon, and instead feed them with content from people like him, who have survived and escaped from cults—especially from those who got sucked into and climbed out of QAnon.

Friedberg, who has deeply studied the movement, says he believes it is “absolutely” too late for mainstream social media platforms to stop QAnon, although there are some things they could do to, say, limit its adherents’ ability to evangelize on Twitter.

“They’ve had three years of almost unfettered access outside of certain platforms to develop and expand,” Friedberg says. Plus, QAnon supporters have an active relationship with the source of the conspiracy theory, who constantly posts new content to decipher and mentions the social media messages of Q supporters in those posts. Breaking QAnon’s influence would require breaking trust between “Q,” an anonymous figure with no defining characteristics, and Q’s supporters. Considering Q’s long track record of inaccurate predictions, that’s difficult, and critical media coverage and deplatforming have yet to really do much on that front. If anything, they only lead QAnon believers to assume they’re on to something.

The best ideas to limit QAnon would require drastic change and soul searching from the people who run the companies on whose platforms QAnon has thrived. But even this week’s announcements aren’t quite as dramatic as they might seem at first: Twitter clarified that it wouldn’t automatically apply its new policies against politicians who promote QAnon content, including several promoters who are running for office in the US.

And, Friedberg said, QAnon supporters were “poised to test these limitations, and already testing these limitations.” For instance, Twitter banned certain conspiracy-affiliated URLs from being shared, but people already have alternative ones to use.

In the end, actually doing something about that would require “rethinking the entire information ecosystem,” says DiResta. “And I mean that in a far broader sense than just reacting to one conspiracy faction.”

from MIT Technology Review https://ift.tt/2CRGsey
